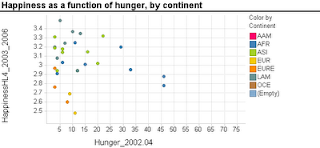

A common type of research question in such studies is about the relationship between two variables. For example, how does the final price of an online auction relate to the seller's feedback rating? A classic exploratory tool for examining such questions (before delving into formal data analysis) is the scatter plot. In small-sample studies, scatter plots are used for exploring relationships and detecting outliers.

Image from http://prsdstudio.com/

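With large samples, however, markers overlap so heavily that the plot can hide the very relationship it is meant to show. Here are some strategies for keeping scatter plots useful with large samples: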

- Aggregation: display groups of observations in a certain area on the plot as a single marker. Size or color can denote the number of aggregated observations.

- Small-multiples: split the data into multiple scatter plots by breaking it down into meaningful subsets, for example by geographical location. Make sure to use the same axis scales on all plots; this is done automatically if your software supports "trellising".

- Sample: draw smaller random samples from the large dataset and plot them in multiple scatter plots (again, keep the axis scales identical on all plots).

- Zoom-in: examine particular areas of the scatter plot by zooming in.
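The four strategies above can be sketched as data-preparation steps that run before any plotting. The following is a minimal illustration in plain Python, not a reference implementation: the function names (`aggregate`, `split_by`, `draw_samples`, `zoom`, `shared_limits`), the rectangular grid used for aggregation, and the synthetic rating/price data are all assumptions made for this example.

```python
import random
from collections import Counter, defaultdict


def aggregate(points, x_width, y_width):
    """Aggregation: count observations per rectangular grid cell.

    Returns {(x_center, y_center): count}. Each entry can be drawn as one
    marker whose size or color encodes the count.
    """
    # Floor division assigns each point to the grid cell that contains it.
    counts = Counter((x // x_width, y // y_width) for x, y in points)
    return {
        ((cx + 0.5) * x_width, (cy + 0.5) * y_width): n
        for (cx, cy), n in counts.items()
    }


def split_by(records, key):
    """Small multiples: group records into subsets, one per panel."""
    panels = defaultdict(list)
    for record in records:
        panels[key(record)].append(record)
    return dict(panels)


def draw_samples(points, sample_size, n_samples, seed=0):
    """Sampling: draw several random samples (each without replacement)."""
    rng = random.Random(seed)
    return [rng.sample(points, sample_size) for _ in range(n_samples)]


def zoom(points, x_range, y_range):
    """Zoom-in: keep only the points inside a rectangular window."""
    (x_lo, x_hi), (y_lo, y_hi) = x_range, y_range
    return [(x, y) for x, y in points if x_lo <= x <= x_hi and y_lo <= y <= y_hi]


def shared_limits(points):
    """Axis limits computed once from the full data, to reuse on every panel."""
    xs, ys = zip(*points)
    return (min(xs), max(xs)), (min(ys), max(ys))


if __name__ == "__main__":
    # Synthetic stand-in data (hypothetical): seller rating vs. final price.
    rng = random.Random(42)
    ratings = [rng.uniform(0, 1000) for _ in range(100_000)]
    points = [(r, 20 + 0.01 * r + rng.gauss(0, 5)) for r in ratings]

    cells = aggregate(points, x_width=50, y_width=2)
    print(len(points), "points reduced to", len(cells), "markers")

    samples = draw_samples(points, sample_size=500, n_samples=4)
    print("axis limits to reuse on all panels:", shared_limits(points))
    print("points in zoom window:", len(zoom(points, (0, 100), (15, 25))))
```

Computing the axis limits once from the full dataset and passing them to every panel is what keeps small multiples and repeated samples visually comparable. Many plotting libraries offer built-in binned or trellised scatter plots that do the same work; the sketch only makes the underlying steps explicit.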

Finally, with large datasets it is useful to consider charts that are based on aggregation such as histograms and box plots. For more on visualization, see the Visualization chapter in Data Mining for Business Intelligence.
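As a rough sketch of what these aggregation-based charts compute, the snippet below derives the bin counts behind a histogram and the five-number summary behind a box plot. The helper names and the choice of the "inclusive" quantile method are assumptions for illustration; charting software may use a different quartile convention.

```python
import statistics
from collections import Counter


def histogram_counts(values, bin_width):
    """Count values per bin; keys are the left edges of the bins."""
    counts = Counter(v // bin_width for v in values)
    return {b * bin_width: n for b, n in sorted(counts.items())}


def five_number_summary(values):
    """Minimum, first quartile, median, third quartile, maximum."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return min(values), q1, median, q3, max(values)


if __name__ == "__main__":
    prices = [1, 2, 11, 12, 25]  # hypothetical final auction prices
    print(histogram_counts(prices, bin_width=10))
    print(five_number_summary(prices))
```

Both summaries stay the same size however many observations go into them, which is why these charts remain readable for large datasets.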